Research Interests

My research primarily explores the synergy between deep learning and statistics, focusing on three main areas:

1) Deep Learning for Modeling Uncertainty:

In complex systems such as weather forecasting, predictions are inherently uncertain. This kind of uncertainty is known as aleatoric uncertainty, and in deep learning it is captured by having the network output a probability distribution instead of a single value. We contribute to this field by developing deep transformation models, which allow flexible output distributions, and by extending them to include interpretable traditional statistical regression models. We have applied these models to practical problems such as predicting day-ahead power consumption, as well as to medical applications.
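To make "flexible output distribution" concrete, here is a minimal sketch of the transformation-model principle. It assumes an outcome y scaled to [0, 1], a monotone Bernstein-polynomial transformation h(y) = Σ θ_k B_k(y), and a standard normal base distribution, so that F(y) = Φ(h(y)) and f(y) = φ(h(y)) h'(y). In a deep transformation model the coefficients θ would be predicted by a neural network from the inputs; here they are fixed, hypothetical numbers, and all function names are illustrative rather than taken from our code.

```python
import math


def bernstein_basis(y, degree):
    """Bernstein basis polynomials B_{k,degree}(y) for k = 0..degree, y in [0, 1]."""
    return [math.comb(degree, k) * y**k * (1 - y) ** (degree - k) for k in range(degree + 1)]


def bernstein_basis_deriv(y, degree):
    """d/dy B_{k,degree}(y) = degree * (B_{k-1,degree-1}(y) - B_{k,degree-1}(y))."""
    lower = bernstein_basis(y, degree - 1)
    derivs = []
    for k in range(degree + 1):
        left = lower[k - 1] if k >= 1 else 0.0
        right = lower[k] if k < degree else 0.0
        derivs.append(degree * (left - right))
    return derivs


def monotone_coefficients(raw):
    """Map unconstrained parameters to strictly increasing theta_0 < theta_1 < ... < theta_M."""
    theta = [raw[0]]
    for r in raw[1:]:
        theta.append(theta[-1] + math.exp(r))  # positive steps keep h(y) increasing
    return theta


def transform(y, raw):
    """Return h(y) and h'(y) for h(y) = sum_k theta_k * B_{k,M}(y)."""
    theta = monotone_coefficients(raw)
    m = len(theta) - 1
    h = sum(t * b for t, b in zip(theta, bernstein_basis(y, m)))
    dh = sum(t * d for t, d in zip(theta, bernstein_basis_deriv(y, m)))
    return h, dh


def transformation_cdf(y, raw):
    """F(y) = Phi(h(y)) with a standard normal base distribution."""
    h, _ = transform(y, raw)
    return 0.5 * (1.0 + math.erf(h / math.sqrt(2.0)))


def transformation_density(y, raw):
    """f(y) = phi(h(y)) * h'(y), by the change-of-variables formula."""
    h, dh = transform(y, raw)
    return math.exp(-0.5 * h * h) / math.sqrt(2.0 * math.pi) * dh


# Hypothetical parameters; a deep transformation model would let a network output them.
raw_params = [-4.0, 1.5, 0.0, 1.2]
for y in (0.1, 0.5, 0.9):
    print(f"y={y}: F={transformation_cdf(y, raw_params):.3f}, "
          f"f={transformation_density(y, raw_params):.3f}")
```

With equal steps between the θ_k, h is linear and the density keeps a normal shape; unequal steps bend h and thereby skew or reshape the density without leaving the model class. In this simplified version a small amount of probability mass, Φ(θ_0) and 1 − Φ(θ_M), lies outside [0, 1].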

2) Quantifying Uncertainty in Deep Learning Models:

Recently, deep neural networks have become standard in many areas. However, they can be spectacularly wrong.

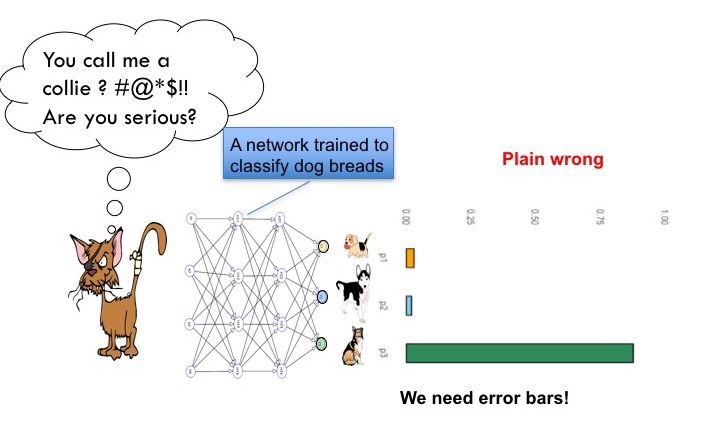

Consider the case where a network should classify an image of a class it has never seen before. Technically speaking, the image class is outside the training set. This is shown in the following picture, where a network trained on dogs is shown a cat.

The network overconfidently assigns a high probability to the wrong class. I get scared when I think of overconfident self-driving cars. This kind of uncertainty, which stems from a lack of knowledge (here, the network has never seen a cat), is referred to as epistemic uncertainty. Standard networks cannot express how uncertain their predictions are. Bayesian statistics provides a natural framework for capturing this kind of uncertainty; however, exact Bayesian inference for neural networks is computationally expensive, so approximations are essential. To address this challenge, we have developed novel approaches such as variational inference with flexible posterior distributions (Bernstein Flows for Flexible Posteriors in Variational Bayes), faster approximations of existing Bayesian approximations (single-shot dropout), and subspace inference methods that enable MCMC sampling in low-dimensional proxy spaces within the vast network parameter space (Bayesian Semi-structured Subspace Inference). We have applied these methods to practical problems in various fields, including high-content screening (a robust deep learning approach in the presence of unknown phenotypes) and medical applications (Deep transformation models for functional outcome prediction after acute ischemic stroke and Integrating uncertainty in deep neural networks for MRI based stroke analysis).
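As a minimal sketch of how a dropout-based approximation turns a single network into a predictive distribution, the code below implements standard Monte Carlo dropout: dropout stays switched on at prediction time, the same input is passed through the network many times, and the spread of the outputs serves as an estimate of epistemic uncertainty. This is the plain sampling approach, not our single-shot dropout method, which is not reproduced here. The tiny one-hidden-layer network, its weights, and the function names are hypothetical.

```python
import random
import statistics

# Hypothetical fixed weights of a tiny 1-input, 6-hidden-unit regression network.
W1 = [0.8, -1.2, 0.5, 1.5, -0.7, 0.9]
B1 = [0.1, 0.2, -0.1, 0.0, 0.3, -0.2]
W2 = [1.0, -0.6, 0.8, 0.4, -1.1, 0.7]
B2 = 0.05


def forward(x, rng, p_drop):
    """One stochastic forward pass: ReLU hidden layer with (inverted) dropout kept on."""
    out = B2
    for w1, b1, w2 in zip(W1, B1, W2):
        h = max(0.0, w1 * x + b1)
        if rng.random() < p_drop:
            h = 0.0  # this hidden unit is dropped in this pass
        else:
            h /= 1.0 - p_drop  # rescale so the expected output matches the no-dropout net
        out += w2 * h
    return out


def mc_dropout_predict(x, n_samples=200, p_drop=0.2, seed=0):
    """Mean and standard deviation of n_samples stochastic forward passes."""
    rng = random.Random(seed)
    samples = [forward(x, rng, p_drop) for _ in range(n_samples)]
    return statistics.fmean(samples), statistics.stdev(samples)


mean, sd = mc_dropout_predict(1.0)
print(f"prediction {mean:.2f} +/- {sd:.2f}")
```

The cost of this approach is the n_samples forward passes per prediction, which is exactly what makes faster approximations attractive in practice.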


3) Merging Deep Learning with Statistical Models:

While deep learning excels at identifying complex patterns, statistical models offer superior interpretability. Our research aims to merge these two worlds, creating models that are both powerful and understandable. This approach runs through most of our published work, in which we integrate deep learning with interpretable statistical models.
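As a minimal sketch of what "powerful and understandable" can mean in one model, assume a semi-structured logistic model whose linear predictor adds an interpretable linear term for a tabular covariate (a hypothetical `age`) to a black-box term standing in for a neural network on unstructured input (hypothetical `features`). Because the black-box term enters additively on the logit scale, exp(beta_age) keeps its classical reading as the odds ratio per additional year of age, holding the other inputs fixed, whatever the network contributes. The coefficients and the stand-in function are invented for illustration and do not describe our published models.

```python
import math


def black_box_term(features):
    """Stand-in for a neural network on unstructured input (hypothetical fixed function)."""
    return math.tanh(0.5 * sum(features)) - 0.3 * features[0] ** 2


def predict_proba(age, features, beta0=-1.0, beta_age=0.04):
    """Semi-structured logistic model: logit P(y=1) = beta0 + beta_age * age + f_NN(features)."""
    eta = beta0 + beta_age * age + black_box_term(features)
    return 1.0 / (1.0 + math.exp(-eta))


def odds(p):
    """Convert a probability into odds."""
    return p / (1.0 - p)


# The odds ratio for one extra year of age is exp(beta_age) for any network output.
for feats in ([0.1, 0.2], [2.0, -1.0]):
    ratio = odds(predict_proba(61.0, feats)) / odds(predict_proba(60.0, feats))
    print(f"features={feats}: odds ratio per year = {ratio:.4f}")
```

In a real model the linear coefficients and the network weights would be fitted jointly, and care is needed so that the network does not absorb the effect meant for the linear part; this sketch ignores that issue.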